Istio Hands-on

Manage Microservice Communication with Kubernetes and Istio Service Mesh

Content:

| Overview |
|---|
| 1. Create your Cloud environment |
| 2. Setup your work environment |
| 3. Install the Cloud Native Starter sample app |
| 4. Telemetry |
| 5. Traffic Management |
| 6. Secure your services |

Exercise 4: Telemetry

Challenges with microservices

A microservice architecture is a natural fit for cloud native applications, and it can greatly increase delivery velocity. But imagine you have many microservices delivered by multiple teams: how do you observe the overall platform and each individual service to find out exactly what is going on? When something goes wrong, how do you know which service, or which communication between services, is causing the problem?

Istio telemetry

Istio’s tracing and metrics features are designed to provide broad and granular insight into the health of all services. Istio’s role as a service mesh makes it an ideal data source for observability information, particularly in a microservices environment. As requests pass through multiple services, identifying performance bottlenecks becomes increasingly difficult with traditional debugging techniques. Distributed tracing provides a holistic view of requests transiting through multiple services, allowing for immediate identification of latency issues. With Istio, the sidecar proxies report tracing spans out of the box; the services themselves only need to forward the trace headers on their outgoing calls (see the Jaeger section below). This exposes latency, retry, and failure information for each hop in a request.

You can read more about how Istio Mixer enables telemetry reporting.

Check if Istio is configured to receive telemetry data

- Verify that the Grafana, Prometheus, Kiali and Jaeger (jaeger-query) add-ons were installed successfully. All add-ons are installed into the `istio-system` namespace:

  `kubectl get services -n istio-system`

  Of course they are enabled: they are part of the demo profile used to install Istio in this workshop.

- In the previous exercise you used the `show-urls.sh` script to display information on how to access the example. This included commands for the Kiali, Prometheus, Grafana, and Jaeger dashboards. If necessary, simply rerun the command:

  `./show-urls.sh`

- Open a second Cloud Shell session. Click on the ‘+’ next to the ‘Session 1’ tab.

- Change into the `istio-handson/deployment/` directory.
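The `kubectl get services` command lists every service in `istio-system`, so you still have to scan the output for the four add-ons. A minimal sketch that checks them in one go is shown below; it assumes bash and relies on `kubectl get ... -o name`, which prints one `service/<name>` line per service. The function name `check_addons` is hypothetical and not part of the workshop scripts.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the workshop repo): report which of the
# telemetry add-ons used in this exercise have a Service in istio-system.
# Expects `kubectl get services -n istio-system -o name` output on stdin,
# i.e. one "service/<name>" line per service.
check_addons() {
  local services missing=0 addon
  services=$(cat)
  for addon in grafana prometheus kiali jaeger-query; do
    # -x: the whole line must match, so "service/kiali" won't match "service/kiali-x"
    if grep -qx "service/${addon}" <<<"$services"; then
      echo "found:   ${addon}"
    else
      echo "missing: ${addon}"
      missing=1
    fi
  done
  # non-zero exit status if at least one add-on is missing
  return "$missing"
}

# Usage (needs access to the cluster):
#   kubectl get services -n istio-system -o name | check_addons
```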

Grafana

Grafana is an open source analytics and monitoring service.

-

Execute the port-forward command in Session 2:

kubectl port-forward svc/grafana 3000:3000 -n istio-systemResult should indicate forwarding from port 3000 to port 3000.

-

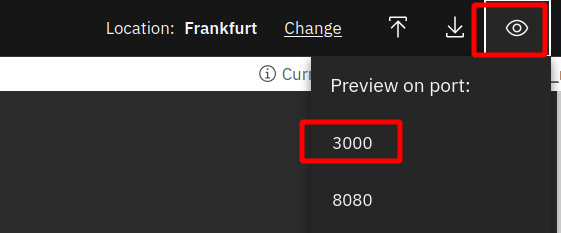

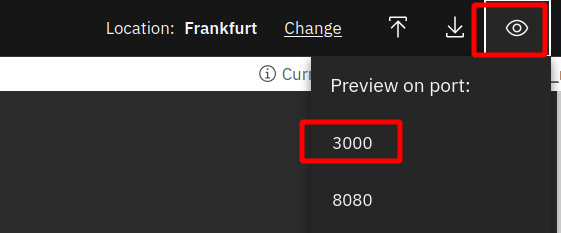

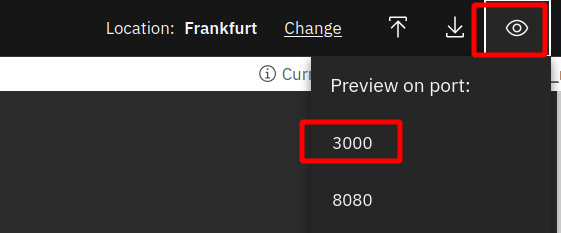

Click on the “Eye” icon in the upper right corner of Cloud Shell and select port 3000.

This will open a new browser tab with the Grafana dashboard.

-

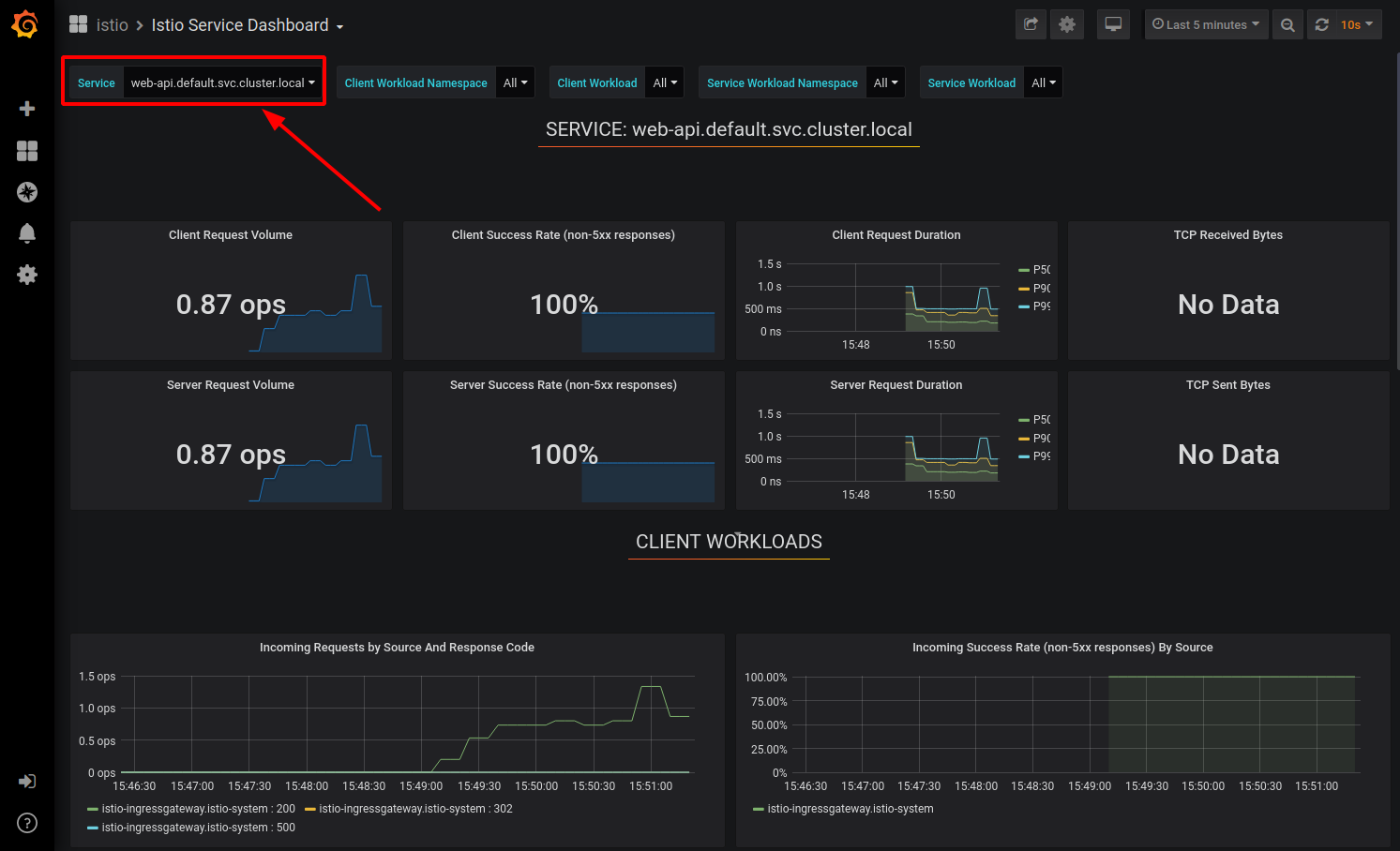

Click on Home -> Istio -> Istio Service Dashboard.

-

Execute the getmultiple API of the Web-API service several times, either through the API Explorer or with the curl command as given by

show-urls.shin Session 1. -

In the Grafana Istio Service Dashboard, select Service “web-api.default.svc.cluster.local” (which is our Web-API service):

You may need to create more load on the API.

This Grafana dashboard provides metrics for each workload. Explore the other dashboard provided as well.
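Creating "more load on the API" by hand gets tedious. A minimal sketch of a load loop is shown below, assuming bash and curl. The function name `generate_load`, the default of 20 requests and the half-second pause are illustrative choices; the endpoint URL is deliberately not hard-coded, so pass in the getmultiple URL that `show-urls.sh` prints for your cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: call the Web-API getmultiple endpoint repeatedly so the
# Grafana panels have traffic to show.
# ASSUMPTION: $1 is the getmultiple URL printed by ./show-urls.sh.
generate_load() {
  local url=$1 count=${2:-20} i code
  for ((i = 1; i <= count; i++)); do
    # -s: silent, -o /dev/null: discard the body, -w: print only the HTTP status.
    # On a connection error curl prints 000; "|| true" keeps the loop going.
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
    echo "request ${i}: HTTP ${code}"
    sleep 0.5
  done
}

# Usage (in Session 1):
#   generate_load "<getmultiple URL from show-urls.sh>" 50
```

Each request passes through the Istio ingress gateway and the sidecars, so it shows up in Grafana and, later in this exercise, in Prometheus, Jaeger and Kiali as well.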

Prometheus

Prometheus is an open-source systems monitoring and alerting toolkit.

If you want to display application-specific metrics, Prometheus needs to be configured accordingly. We have an example in the Cloud Native Starter repository.

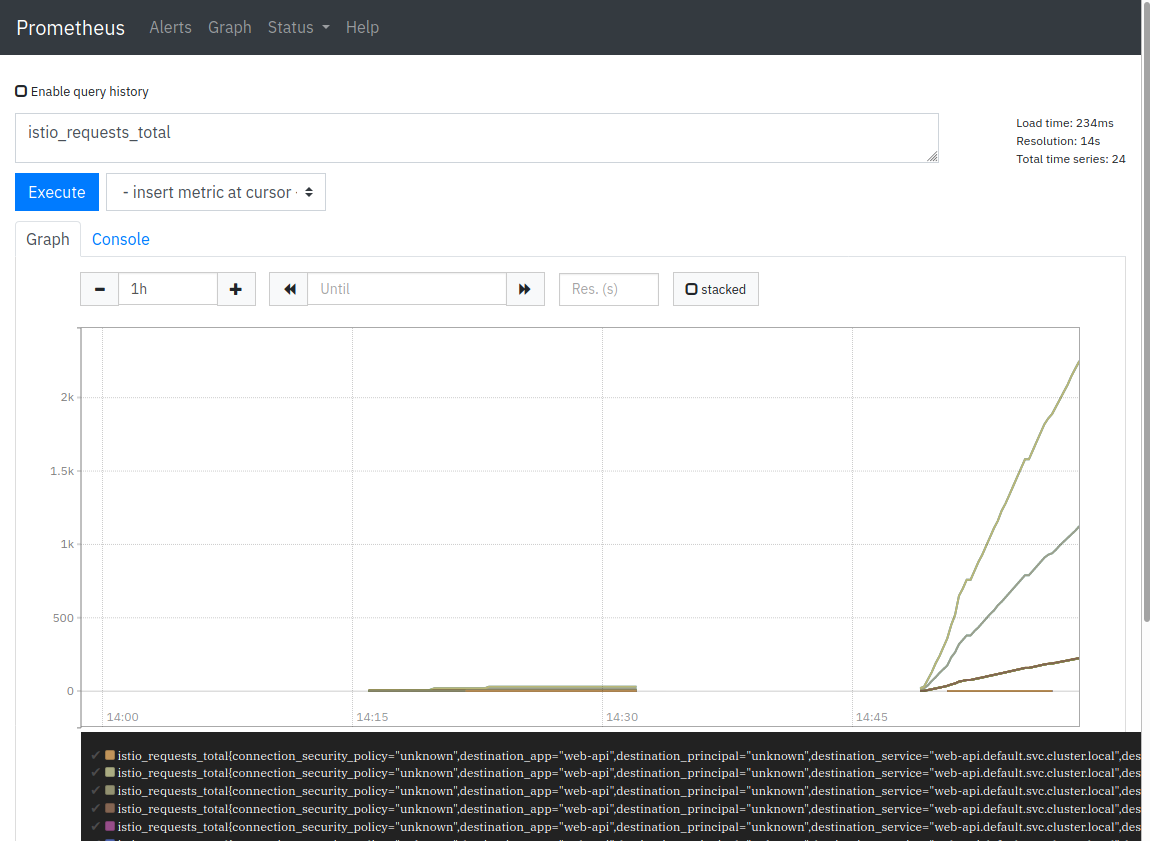

In this exercise you will display one of the Istio metrics, `istio_requests_total`.

-

Execute the port-forward command in Session 2:

kubectl port-forward svc/prometheus 3000:9090 -n istio-systemResult should indicate forwarding from port 3000 to port 9090.

-

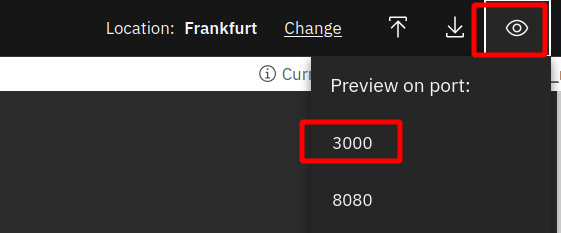

Click on the “Eye” icon in the upper right corner of Cloud Shell and select port 3000.

This will open a new browser tab with the Prometheus dashboard.

-

In the query field, enter ‘istio_requests_total’.

-

Click on ‘Execute’ and select the ‘Graph’ tab.
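The same queries can also be run from the command line through Prometheus' HTTP API (`/api/v1/query`), which returns JSON. A minimal sketch follows. It assumes the Prometheus port-forward (local port 3000 to 9090) is still running; `PROM_URL` and `prom_query` are hypothetical names. The second example query narrows the metric with `destination_service_name`, one of Istio's standard metric labels, and groups the per-second rate by `response_code`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a PromQL query against the Prometheus HTTP API.
# ASSUMPTION: `kubectl port-forward svc/prometheus 3000:9090 -n istio-system`
# is still running; set PROM_URL to point elsewhere.
PROM_URL=${PROM_URL:-http://localhost:3000}

prom_query() {
  # -G turns the --data-urlencode pair into a URL-encoded GET query string,
  # which is what the /api/v1/query endpoint expects.
  curl -s -G "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}

# Usage:
#   prom_query 'istio_requests_total'
#   prom_query 'sum(rate(istio_requests_total{destination_service_name="web-api"}[5m])) by (response_code)'
```

Using `--data-urlencode` means braces, quotes and brackets in the PromQL expression are encoded for you rather than having to be escaped by hand in the URL.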

Jaeger

In a monolithic application, tracing events is rather simple compared to tracing in a microservices architecture. What happens when you make a ‘getmultiple’ REST call to the Web-API service?

- The Web-API service makes a REST call to the Articles service, requesting 5 or 10 articles.

- The Articles service returns the data for the articles, including the author's name.

- The Web-API service then makes a REST call to the Authors service, requesting information about the author.

- The Authors service returns the Twitter ID and Blog URL for the author. There will be 5 or 10 calls, one for each article.

- The Web-API service combines this information and sends a JSON object of 5 or 10 articles back to the requestor.

In this simple example we already have three services. Imagine an application that is more complex and where the services are scaled into multiple replicas for availability and response times. We need a tool that is able to trace a request through all these distributed invocations. One such tool is Jaeger, and it is installed in our Istio setup. Jaeger (Jäger) is German for hunter, and hunters are used to searching for traces …

-

Execute the port-forward command in Session 2:

kubectl port-forward svc/jaeger-query 3000:16686 -n istio-systemResult should indicate forwarding from port 3000 to port 16686.

-

Click on the “Eye” icon in the upper right corner of Cloud Shell and select port 3000.

This will open a new browser tab with the Jaeger dashboard.

-

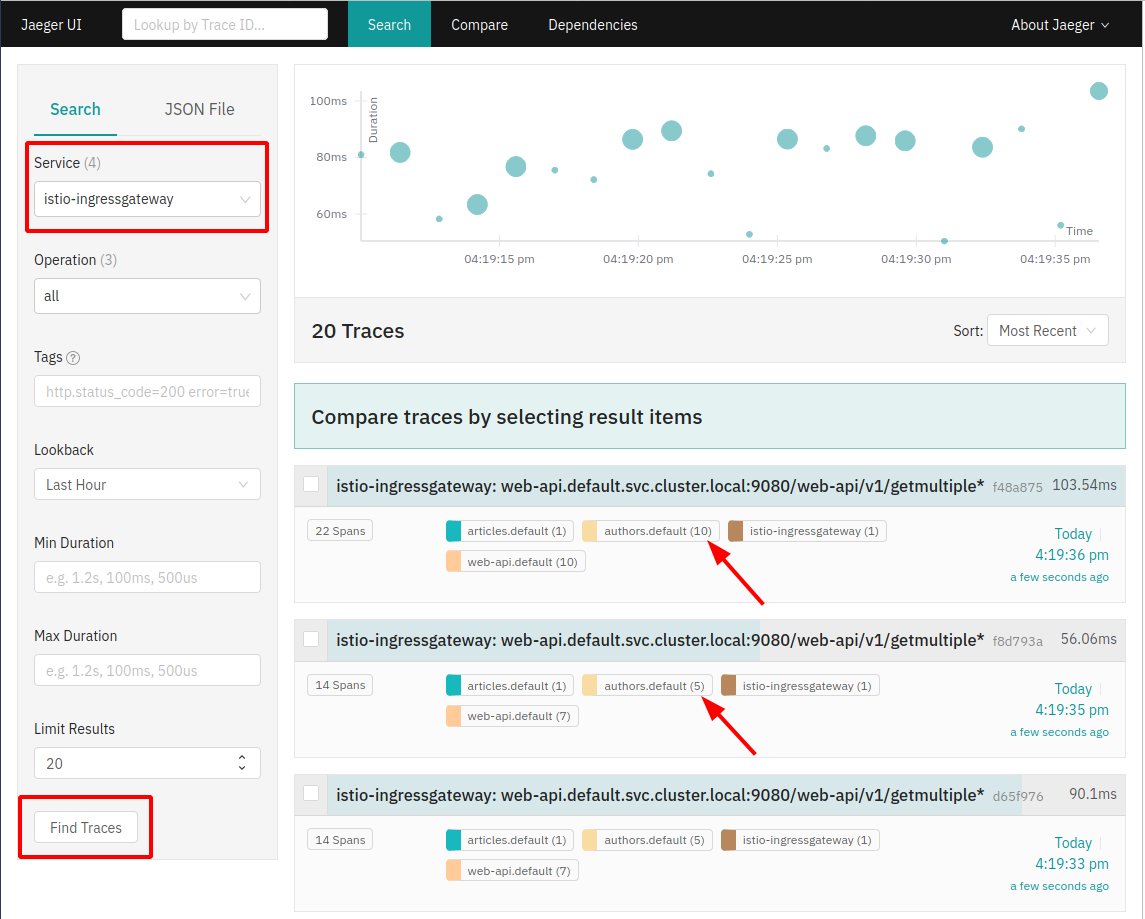

In the Service pulldown, select ‘istio-ingressgateway’.

-

Click ‘Find Traces’.

In the trace list you can see traces with 10, and traces with 5 requests to the Authors service.

-

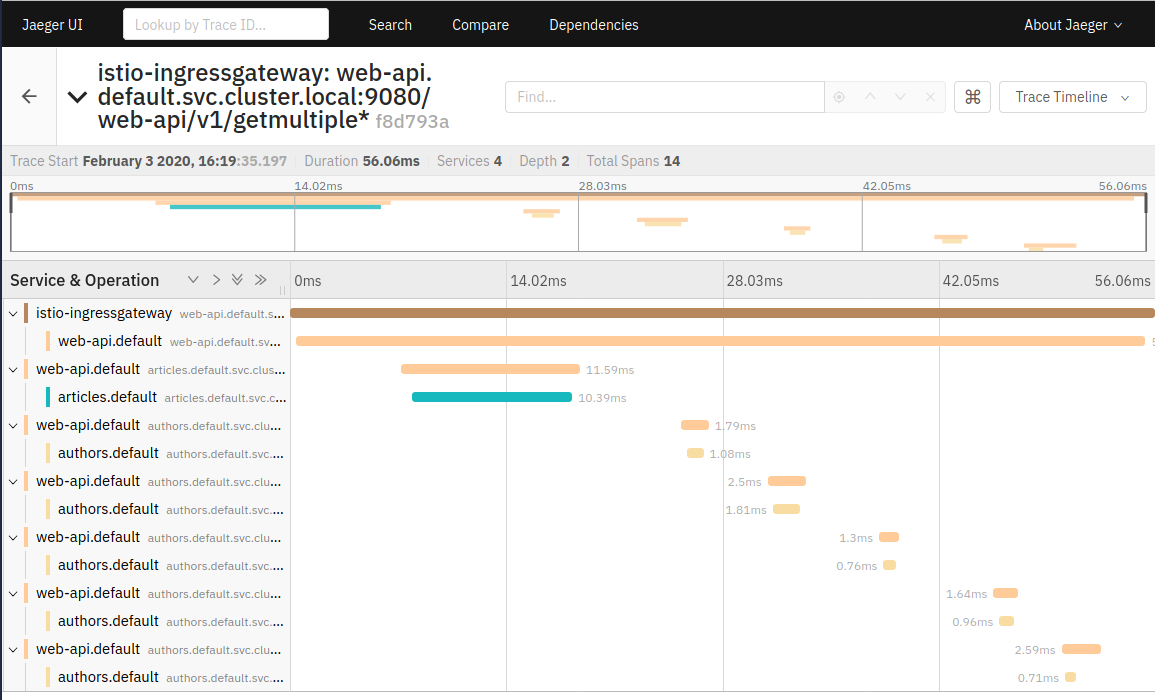

Select one of the traces:

You can now see the details about the trace spans as the request is routed through the services.

The Web-API and Articles services are written using Eclipse MicroProfile. MicroProfile takes care of default trace spans, managing parent and child spans, and passing trace headers, all without any intervention from the programmer. Nice!
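To make "passing of trace headers" concrete: the Envoy sidecars create the spans, but they can only stitch the Web-API, Articles and Authors spans into one trace if each service copies the tracing headers of the incoming request onto its outgoing requests. The sketch below does this by hand in bash purely for illustration; it is not how MicroProfile implements it. The header list is the B3/Zipkin-compatible set named in Istio's distributed tracing documentation for tracers such as Jaeger (newer Istio releases document additional headers). The input format and the function name `trace_header_args` are assumptions.

```shell
#!/usr/bin/env bash
# Illustration only: pick the tracing headers out of an incoming request and
# turn them into curl -H options for an outgoing (downstream) request.
# ASSUMPTION: incoming headers arrive on stdin as "Name: value" lines.
TRACE_HEADERS=(x-request-id x-b3-traceid x-b3-spanid x-b3-parentspanid
               x-b3-sampled x-b3-flags x-ot-span-context)

trace_header_args() {
  local line name wanted
  while IFS= read -r line; do
    line=${line%$'\r'}      # tolerate CRLF line endings from raw HTTP
    name=${line%%:*}        # header name is everything before the first ':'
    name=${name,,}          # header names are case-insensitive
    for wanted in "${TRACE_HEADERS[@]}"; do
      if [[ $name == "$wanted" ]]; then
        # one "-H" option followed by the header line, one per output line
        printf '%s\n' -H "$line"
      fi
    done
  done
}

# Usage:
#   mapfile -t args < <(trace_header_args < incoming-headers.txt)
#   curl "${args[@]}" "<downstream service URL>"
```

Headers that are not in the list (Host, Accept and so on) are dropped, because only the tracing context has to travel with the downstream call.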

Kiali

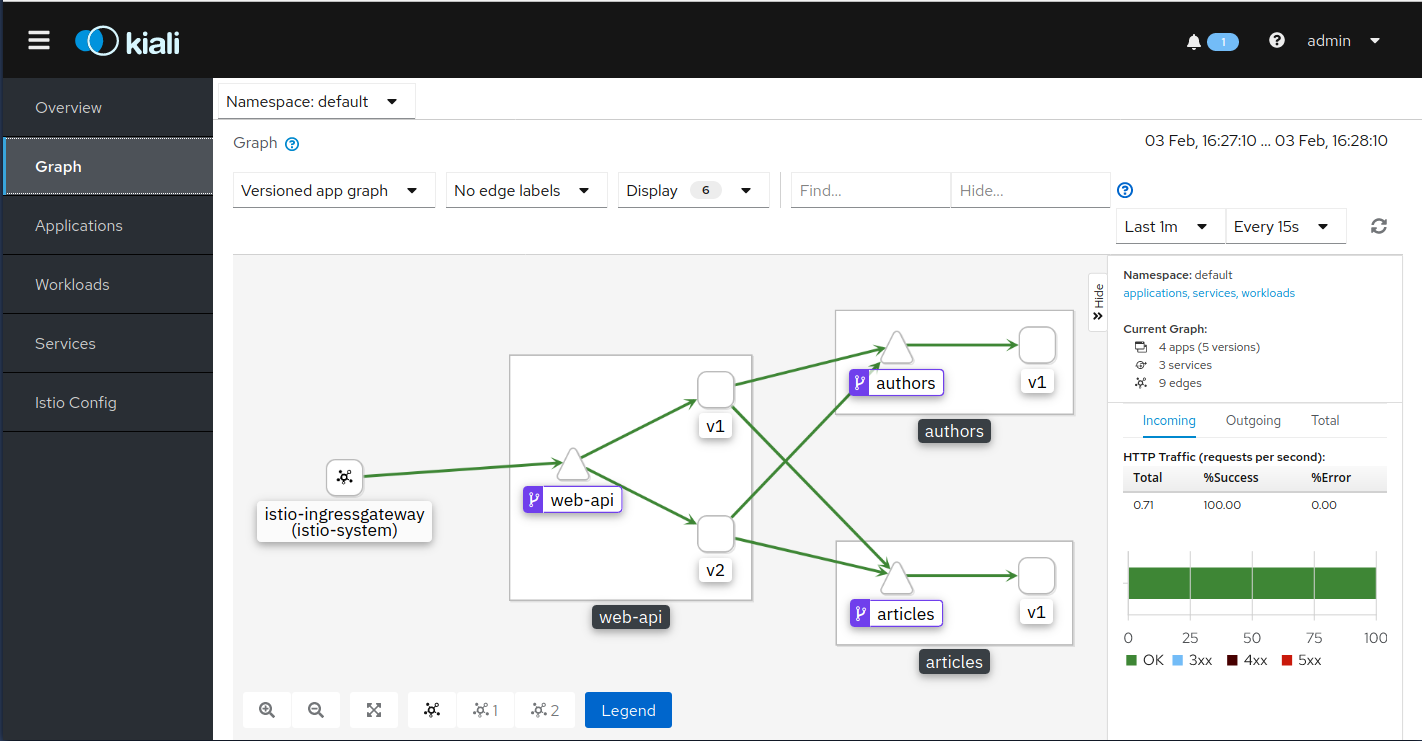

“Kiali is an observability console for Istio with service mesh configuration capabilities. It helps you to understand the structure of your service mesh by inferring the topology, and also provides the health of your mesh.”

- Execute the port-forward command in Session 2:

  `kubectl port-forward svc/kiali 3000:20001 -n istio-system`

  The result should indicate forwarding from port 3000 to port 20001.

- Click on the “Eye” icon in the upper right corner of Cloud Shell and select port 3000. This will open a new browser tab with the Kiali dashboard.

- Log in with user ‘admin’ and password ‘admin’.

- Open the ‘Graph’ tab and select the ‘default’ namespace.

This shows the components of your microservices architecture and the traffic between them. Explore the other tabs.

Keep the Kiali dashboard open; we will use Kiali in the next exercise about Traffic Management.
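All four dashboards in this exercise were reached the same way: forward local port 3000 (the port opened through the Cloud Shell “Eye” icon) to the add-on's service in `istio-system`. The sketch below captures that pattern with the target ports used in the steps above. The function names `dashboard_port` and `open_dashboard` are hypothetical; the port numbers are taken directly from the commands in this exercise.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: map each add-on to the service port used in this
# exercise and forward local port 3000 to it.
dashboard_port() {
  case "$1" in
    grafana)      echo 3000 ;;
    prometheus)   echo 9090 ;;
    jaeger-query) echo 16686 ;;
    kiali)        echo 20001 ;;
    *)            echo "unknown add-on: $1" >&2; return 1 ;;
  esac
}

open_dashboard() {
  local port
  port=$(dashboard_port "$1") || return 1
  # Runs in the foreground like the original commands. Stop it with Ctrl+C
  # before opening the next dashboard, since they all share local port 3000.
  kubectl port-forward "svc/$1" "3000:${port}" -n istio-system
}

# Usage (in Session 2):
#   open_dashboard kiali
```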

What about logging?

Logging is an important function, but it is not really part of Istio. If you are interested in a central logging service (LogDNA), see the optional Exercise 4a: Logging.